Analysis of Sensor Technology in Autonomous Mobile Robots

2025-11-06 11:41:00

Autonomous mobile robots (AMRs) are increasingly deployed across many industries to streamline operational workflows, freeing personnel from tedious, repetitive tasks so that they can apply their expertise to more intellectually demanding work. The autonomy of such technology rests on its capacity to perceive its environment in real time and to make decisions in response to local environmental changes.

Because AMRs navigate freely within facilities and their movements and behaviours depend on dynamic environments, multiple sensing and monitoring technologies must work in concert. The diverse sensors integrated within AMRs enable the robots to move through facilities, avoid obstacles, identify loads requiring pickup and transport, and complete tasks with a high degree of safety. This article explores the various sensor systems that ensure AMRs operate as intended.

Cameras/Visual Sensors: The Eyes of the AMR

Vision sensors and cameras rank among the most critical sensors on AMRs, as they emulate the human visual system, enabling the robot to ‘see’ its immediate surroundings (and any new environments it enters). These vision sensors capture light within the AMR's local environment and convert it into digital signals that can be analysed and processed. This allows the AMR to make informed decisions based on what it ‘sees’, a process analogous to human visual cognition.

Unlike other sensors employed in AMRs, visual sensors can construct images of their immediate surroundings and thereby recognise intricate environmental features. They capture high-resolution images and can also record video for object identification and detection (such as hazardous materials or loads requiring transport). Certain cameras incorporate artificial intelligence (AI)-driven computer vision capabilities, enabling AMRs to recognise signage, comprehend visual cues, and execute tasks that demand a higher level of intelligence.

Given the significance of visual cameras, multiple types are employed in AMRs:

Monocular cameras: These operate similarly to conventional cameras, where light enters through an aperture and is projected onto an imaging plane (e.g., a charge-coupled device). Distortions within the image are eliminated and pixels aligned, generating a two-dimensional image resembling human visual perception. Light field cameras are based on monocular designs but incorporate additional microlens arrays to deliver more precise depth information.

Stereo cameras: These sensor systems comprise two monocular cameras, simulating human vision more realistically through dual ‘eyes’. By combining the known distance between the optical centres of the two cameras (the baseline) with the shift in an object's position between the two images, they provide enhanced depth perception.

RGBD cameras: These systems integrate depth sensors with monocular cameras, simultaneously providing colour (RGB) and depth (D) information per pixel. Primary depth sensors incorporated into RGBD cameras include infrared structured light sensors (calculating depth by projecting structured light patterns) and time-of-flight (ToF) sensors (measuring depth by timing light pulse round trips). Fusing depth maps with RGB images generates composite imagery containing both depth and colour information.

Event cameras: These record brightness changes at individual pixels, marking each change as an ‘event’. Environmental data is derived by analysing these variations in pixel brightness.
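To make the stereo principle above concrete, here is a minimal sketch of depth estimation from a stereo pair. It assumes the standard pinhole model for rectified cameras, which the article does not spell out; the function name, parameter names, and calibration values are illustrative only.

```python
def stereo_depth(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres of a point seen by both cameras of a rectified stereo pair.

    focal_length_px: focal length expressed in pixels (assumed identical for both cameras)
    baseline_m:      distance between the two optical centres, in metres
    disparity_px:    horizontal shift of the point between the left and right images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical calibration: 800 px focal length, 10 cm between optical centres.
depth = stereo_depth(focal_length_px=800.0, baseline_m=0.10, disparity_px=40.0)
print(f"estimated depth: {depth:.2f} m")
```

Nearby objects shift more between the two images than distant ones, so a larger disparity yields a smaller depth.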

Inertial Measurement Units (IMUs) Enable AMR Mobility

An IMU is an electronic system comprising a gyroscope (rotation sensor), accelerometer (motion sensor), and magnetometer (magnetic field sensor), enabling the AMR to track its own motion state and orientation. The IMU facilitates successful navigation within the environment and is typically integrated into the AMR's internal navigation system.

The combination of multiple sensors within the IMU allows the AMR to measure its own acceleration, angular velocity, and orientation, with reduced susceptibility to external interference. Among the parameters utilised by AMRs, acceleration and angular velocity are routinely measured quantities; conversely, magnetometer measurements are employed less frequently due to their relatively diminished accuracy in high-precision navigation tasks.
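As a rough illustration of how acceleration and angular velocity can be turned into a motion estimate, the sketch below integrates IMU samples into a planar pose. It is a simplified dead-reckoning example under stated assumptions (flat floor, noise-free readings, simple Euler integration); `Pose2D` and `integrate_imu` are hypothetical names, not part of any AMR software mentioned in this article.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0        # metres
    y: float = 0.0        # metres
    heading: float = 0.0  # radians
    speed: float = 0.0    # metres per second, along the heading


def integrate_imu(pose: Pose2D, forward_accel: float, yaw_rate: float, dt: float) -> Pose2D:
    """Advance the pose by one IMU sample using simple Euler integration."""
    heading = pose.heading + yaw_rate * dt    # gyroscope: angular velocity -> heading
    speed = pose.speed + forward_accel * dt   # accelerometer: acceleration -> speed
    return Pose2D(
        x=pose.x + speed * math.cos(heading) * dt,
        y=pose.y + speed * math.sin(heading) * dt,
        heading=heading,
        speed=speed,
    )


# Ten hypothetical samples at 10 Hz: accelerate at 1 m/s^2 while driving straight.
pose = Pose2D()
for _ in range(10):
    pose = integrate_imu(pose, forward_accel=1.0, yaw_rate=0.0, dt=0.1)
print(pose)
```

Because each step builds on the previous estimate, small measurement errors accumulate over time, which is one reason IMU data is usually combined with the other sensors described in this article.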

Ultrasonic Sensors Are Multifunctional Detection Devices

Ultrasonic sensors use acoustic waves to measure distances and detect nearby objects. They emit sound waves at frequencies above 20 kHz and detect the echo reflected back to the receiver after the waves strike an object. This enables the AMR to calculate the distance to the object and plan its path accordingly. Consequently, ultrasonic sensors are frequently employed for collision avoidance and are typically integrated into the AMR's sensor array alongside other object detection sensors, such as infrared sensors and LiDAR.

Ultrasonic sensors prove particularly valuable in congested environments demanding precise movement. Their ranging methods fall into two categories:

Reflective ranging: As described above, this object detection method serves AMR positioning and navigation, constituting the primary application of ultrasonic sensors in AMRs.

Unidirectional ranging: Employed for locating AMRs within specific zones, where transmitter and receiver are positioned separately. Upon receiving the transmitter's signal, the receiver measures the distance between them by analysing the time, phase, and acoustic vibration characteristics of the sound wave.
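The two ranging methods above differ mainly in whether the sound travels out and back or only one way. The sketch below shows the time-based calculation for each. The speed of sound value is an assumption (dry air at roughly 20 °C), the function names are illustrative, and the one-way case additionally assumes the transmitter and receiver share a synchronised clock.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at roughly 20 degrees Celsius


def reflective_range(echo_time_s: float, speed: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to an obstacle from a round-trip echo time (out and back)."""
    return speed * echo_time_s / 2.0


def one_way_range(flight_time_s: float, speed: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance between a separate transmitter and receiver (single trip)."""
    return speed * flight_time_s


# The same 10 ms measurement means different distances in the two modes.
print(reflective_range(0.010))
print(one_way_range(0.010))
```

Only the time-of-travel component is modelled here; the phase and vibration analysis mentioned above is outside the scope of this sketch.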

Infrared Sensors Detect Objects Under Varying Light Conditions

Infrared sensors also serve for object detection, functioning as proximity sensors, motion detectors, and communication tools between AMRs. Their primary functions include detecting surrounding objects, measuring distances, and surveying the environment based on local thermal signals.

Infrared sensors emit a beam of infrared light (an electromagnetic wave situated between visible light and microwaves in the electromagnetic spectrum), and a receiver measures the beam's intensity. The further the beam travels from its source, the lower its intensity becomes. Leveraging this principle, infrared sensors can measure the distance between an AMR and an object, or the relative distance between two AMRs.
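One simple way to turn the intensity principle above into a distance estimate is sketched below. It assumes an idealised inverse-square falloff calibrated against a single reference measurement. The article does not specify the falloff model, and real sensors are also affected by surface reflectivity and ambient light, so treat this purely as an illustration with hypothetical names and values.

```python
import math


def ir_distance(measured_intensity: float, ref_intensity: float, ref_distance_m: float) -> float:
    """Estimate distance from received intensity under an assumed inverse-square model.

    ref_intensity is the intensity previously measured at ref_distance_m (calibration).
    """
    if measured_intensity <= 0 or ref_intensity <= 0:
        raise ValueError("intensities must be positive")
    return ref_distance_m * math.sqrt(ref_intensity / measured_intensity)


# Hypothetical calibration: intensity 100 (arbitrary units) at 1 m.
# A reading of 25, a quarter of the reference, implies twice the distance.
print(ir_distance(measured_intensity=25.0, ref_intensity=100.0, ref_distance_m=1.0))
```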

By employing infrared waves, these sensors detect both heat and light simultaneously, enabling AMRs to navigate complex environments and perform tasks under varying lighting conditions. However, unlike ultrasonic sensors, infrared sensors cannot be used for AMR positioning: many obstacles block infrared signals, so they cannot cover the distance required for positioning along a typical A-to-B path.

LiDAR Sensors: Efficient and Precise Detection Systems

LiDAR sensors are another category of sensor employed for object detection in AMRs. Using laser beams to measure the distances between surrounding objects and the AMR, LiDAR is a critical component of navigation systems. Within a LiDAR system, multiple laser beams are emitted from transmitters and reflected back to receivers upon striking objects.

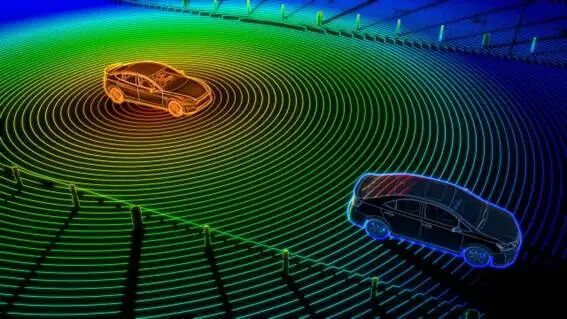

LiDAR employs Time-of-Flight (ToF) technology to measure the propagation distance of laser beams, consolidating information from all beams (originating from various transmitters) into point cloud data for a specific area. This aggregated data constructs a dynamic three-dimensional model of the local environment, enabling AMRs to navigate and identify objects with high precision and efficiency.

Figure 1. LiDAR employs ToF to measure the distance travelled by laser beams, combining all beam information (from all transmitters) into a point cloud for a given area. (Source: Adobe Stock)
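The ToF-to-point-cloud step can be sketched as follows. For brevity the example is two-dimensional (a single horizontal scan plane) rather than the full three-dimensional model described above, and it assumes each beam's firing angle is known. The function names and sample values are illustrative.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_to_range(round_trip_s: float) -> float:
    """Range in metres from the round-trip travel time of a laser pulse."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def scan_to_points(round_trip_times_s, beam_angles_rad):
    """Convert one scan (a time and an angle per beam) into 2D points in the sensor frame."""
    points = []
    for t, angle in zip(round_trip_times_s, beam_angles_rad):
        r = tof_to_range(t)
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


# Hypothetical scan: three beams that each hit a surface about 5 m away.
t_5m = 2 * 5.0 / SPEED_OF_LIGHT_M_S
for x, y in scan_to_points([t_5m, t_5m, t_5m], [0.0, math.pi / 4, math.pi / 2]):
    print(f"({x:.2f}, {y:.2f})")
```

A real sensor repeats this for many beams and elevation angles per revolution, which is what produces the dense point cloud.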

LiDAR models can be further enhanced with AI capabilities trained on point cloud data. AMRs equipped with such capabilities can handle more complex traffic scenarios in congested work environments.

Furthermore, LiDAR is used for AMR positioning because it can measure long ranges with high precision. LiDAR positioning primarily determines the AMR's location via environmental mapping. Where no pre-existing environmental map exists, LiDAR-based Simultaneous Localisation and Mapping (SLAM) technology can survey the local environment to generate a LiDAR point cloud, from which the AMR's position is estimated.

Radio Frequency (RF) Technology Ensures AMR Positioning

While many sensors combine object detection and positioning functions, RF sensing technology is specifically dedicated to AMR positioning. In RF solutions, beacon stations are deployed around AMR-equipped facilities to transmit radio signals. Upon receiving these signals, the AMR determines its own location.

Unlike ultrasonic and infrared waves, radio waves can penetrate doors and walls, enabling AMR tracking throughout entire facilities rather than being confined to localised areas.

Two primary RF positioning methods are employed for AMRs:

Geometric-based positioning: Determines position by measuring distances between the AMR and beacon stations, or by calculating the angle of incidence of received signals. Common techniques include Time of Arrival (TOA), Time Difference of Arrival (TDOA), Received Signal Strength Indication (RSSI), and Angle of Arrival (AOA), which estimate the distance or signal angle between transmitter and receiver.

Fingerprinting: Compares received RF signals against a ‘fingerprint’ database containing estimated positions. This database is constructed by deploying RF sensor receiver stations at multiple sampling points throughout the area to collect RF signals and record their parameters. Once established, sensor stations continuously capture wireless signals and compare them against the database to determine the AMR's position.
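Both RF methods can be illustrated with a short sketch. The first function solves for a 2D position from three beacon ranges (as might be obtained from TOA measurements), and the second picks the closest entry in a fingerprint database of RSSI readings. The beacon layout, database format, and function names are assumptions made for this example, and measurements are treated as noise-free; a practical system would use more beacons and a least-squares or probabilistic estimate.

```python
import math


def trilaterate(beacons, distances):
    """2D position from three beacon positions and the measured range to each."""
    (x1, y1), (x2, y2), (x3, y3) = beacons
    d1, d2, d3 = distances
    # Subtracting the first circle equation from the other two leaves a linear 2x2 system.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("beacons are collinear; position is ambiguous")
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - b1 * a21) / det)


def locate_by_fingerprint(observed_rssi, database):
    """Return the surveyed position whose stored RSSI vector is closest to the observation.

    database: list of (position, rssi_vector) pairs collected at the sampling points.
    """
    def squared_error(entry):
        _, stored = entry
        return sum((o - s) ** 2 for o, s in zip(observed_rssi, stored))

    position, _ = min(database, key=squared_error)
    return position


# Hypothetical layout: three beacons and a robot whose true position is (3, 4).
beacons = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
ranges = [math.dist((3.0, 4.0), b) for b in beacons]
print(trilaterate(beacons, ranges))

# Hypothetical fingerprint database: RSSI in dBm from three beacons at two sampling points.
database = [((0.0, 0.0), [-40, -70, -70]), ((5.0, 5.0), [-60, -60, -60])]
print(locate_by_fingerprint([-42, -69, -71], database))
```

The geometric approach needs no site survey but depends on accurate ranges, whereas fingerprinting trades an up-front survey for robustness to site-specific signal behaviour.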

Conclusion

AMRs typically integrate multiple sensors working in concert to ensure efficient and safe operation across diverse environments and conditions, performing their tasks without impeding human workers' activities. Indeed, without these sensors, neither AMRs nor other autonomous technologies could exist.